All leaked interview problems are collected from Internet.

538. Convert BST to Greater Tree

Given a Binary Search Tree (BST), convert it to a Greater Tree such that every key of the original BST is changed to the original key plus sum of all keys greater than the original key in BST.

Example:

Input: The root of a Binary Search Tree like this:

5

/ \

2 13

Output: The root of a Greater Tree like this:

18

/ \

20 13

b'

Initial Thoughts

\nThis question asks us to modify an asymptotically linear number of nodes in a\ngiven binary search tree, so a very efficient solution will visit each node\nonce. The key to such a solution would be a way to visit nodes in descending\norder, keeping a sum of all values that we have already visited and adding\nthat sum to the node\'s values as we traverse the tree. This method for tree traversal is\nknown as a\nreverse in-order traversal, and allows us to guarantee visitation of each\nnode in the desired order. The basic idea of such a traversal is that before\nvisiting any node in the tree, we must first visit all nodes with greater\nvalue. Where are all of these nodes conveniently located? In the right\nsubtree.

\nApproach #1 Recursion [Accepted]

\nIntuition

\nOne way to perform a reverse in-order traversal is via recursion. By using\nthe call stack to return to previous nodes, we can easily visit the nodes in\nreverse order.

\nAlgorithm

\nFor the recursive approach, we maintain some minor "global" state so each\nrecursive call can access and modify the current total sum. Essentially, we\nensure that the current node exists, recurse on the right subtree, visit the\ncurrent node by updating its value and the total sum, and finally recurse on\nthe left subtree. If we know that recursing on root.right properly\nupdates the right subtree and that recursing on root.left properly updates\nthe left subtree, then we are guaranteed to update all nodes with larger values\nbefore the current node and all nodes with smaller values after.

Complexity Analysis

\n- \n

- \n

Time complexity : \n

\nA binary tree has no cycles by definition, so

\nconvertBSTgets called on\neach node no more than once. Other than the recursive calls,convertBST\ndoes a constant amount of work, so a linear number of calls toconvertBST\nwill run in linear time. \n - \n

Space complexity : \n

\nUsing the prior assertion that

\nconvertBSTis called a linear number of\ntimes, we can also show that the entire algorithm has linear space\ncomplexity. Consider the worst case, a tree with only right (or only left)\nsubtrees. The call stack will grow until the end of the longest path is\nreached, which in this case includes all nodes. \n

\n

Approach #2 Iteration with a Stack [Accepted]

\nIntuition

\nIf we don\'t want to use recursion, we can also perform a reverse in-order\ntraversal via iteration and a literal stack to emulate the call stack.

\nAlgorithm

\nOne way to describe the iterative stack method is in terms of the intuitive\nrecursive solution. First, we initialize an empty stack and set the current\nnode to the root. Then, so long as there are unvisited nodes in the stack or\nnode does not point to null, we push all of the nodes along the path to\nthe rightmost leaf onto the stack. This is equivalent to always processing\nthe right subtree first in the recursive solution, and is crucial for the\nguarantee of visiting nodes in order of decreasing value. Next, we visit the\nnode on the top of our stack, and consider its left subtree. This is just\nlike visiting the current node before recursing on the left subtree in the\nrecursive solution. Eventually, our stack is empty and node points to the\nleft null child of the tree\'s minimum value node, so the loop terminates.

Complexity Analysis

\n- \n

- \n

Time complexity : \n

\nThe key observation is that each node is pushed onto the stack exactly\nonce. I will take for granted the assumption that a node will always be\npushed at least once, as the alternative would imply that at least one\nnode is disconnected from the root. Notice that nodes are only pushed\nonto the stack when they are pointed to by

\nnodeat the beginning of the\nouterwhileloop, or when there is a path to them from such a node by\nusing onlyrightpointers. Then notice that at the end of each\niteration of the loop,nodepoints to the left child of a node that has\nbeen pushed onto (and subsequently popped from) the stack. Therefore,\nbecause the outerwhileloop always begins withnodepointing to\nNone, the root (which is not pointed to by any other node), or a left\nchild of a visited node, we cannot revisit nodes. \n - \n

Space complexity : \n

\nIf we assume that the above logic is sound, the assertion that each node is\npushed onto the stack exactly once implies that the stack can contain (at\nmost) nodes. All other parts of the algorithm use constant space, so\nthere is overall a linear memory footprint.

\n \n

\n

Approach #3 Reverse Morris In-order Traversal [Accepted]

\nIntuition

\nThere is a clever way to perform an in-order traversal using only linear time\nand constant space, first described by J. H. Morris in his 1979 paper\n"Traversing Binary Trees Simply and Cheaply". In general, the recursive and\niterative stack methods sacrifice linear space for the ability to return to a\nnode after visiting its left subtree. The Morris traversal instead exploits\nthe unused null pointer(s) of the tree\'s leaves to create a temporary link\nout of the left subtree, allowing the traversal to be performed using only\nconstant additional memory. To apply it to this problem, we can simply swap\nall "left" and "right" references, which will reverse the traversal.

Algorithm

\nFirst, we initialize node, which points to the root. Then, until node\npoints to null (specifically, the left null of the tree\'s minimum-value\nnode), we repeat the following. First, consider whether the current node has\na right subtree. If it does not have a right subtree, then there is no\nunvisited node with a greater value, so we can visit this node and move into\nthe left subtree. If it does have a right subtree, then there is at least one\nunvisited node with a greater value, and thus we must visit first go to the\nright subtree. To do so, we obtain a reference to the in-order successor (the\nsmallest-value node larger than the current) via our helper function\ngetSuccessor. This successor node is the node that must be visited\nimmediately before the current node, so it by definition has a null left\npointer (otherwise it would not be the successor). Therefore, when we first\nfind a node\'s successor, we temporarily link it (via its left pointer) to\nthe node and proceed to the node\'s right subtree. Then, when we finish\nvisiting the right subtree, the leftmost left pointer in it will be our\ntemporary link that we can use to escape the subtree. After following this\nlink, we have returned to the original node that we previously passed\nthrough, but did not visit. This time, when we find that the successor\'s\nleft pointer loops back to the current node, we know that we have visited\nthe entire right subtree, so we can now erase the temporary link and move\ninto the left subtree.

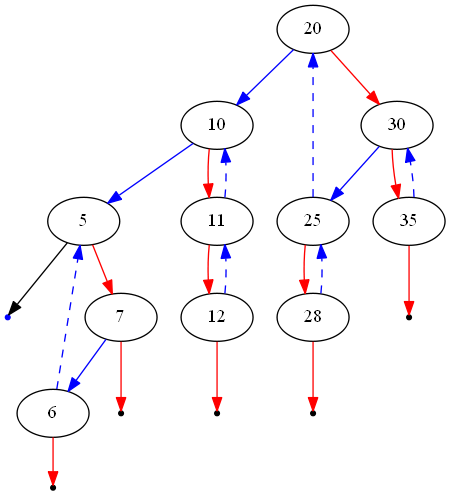

The figure above shows an example of the modified tree during a reverse\nMorris traversal. Left pointers are illustrated in blue and right pointers in\nred. Dashed edges indicate temporary links generated at some point during the\nalgorithm (which will be erased before it terminates). Notice that blue edges\ncan be dashed, as we always exploit the empty left pointer of successor\nnodes. Additionally, notice that every node with a right subtree has a link\nfrom its in-order successor.

Complexity Analysis

\n- \n

- \n

Time complexity : \n

\nAlthough the Morris traversal does slightly more work than the other\napproaches, it is only by a constant factor. To be specific, if we can\nshow that each edge in the tree is traversed no more than times (for\nsome constant ), then the algorithm is shown to have linear time\ncomplexity. First, note that

\ngetSuccessoris called at most twice per\nnode. On the first invocation, the temporary link back to the node in\nquestion is created, and on the second invocation, the temporary link is\nerased. Then, the algorithm steps into the left subtree with no way to\nreturn to the node. Therefore, each edge can only be traversed 3 times:\nonce when we move thenodepointer, and once for each of the two calls\ntogetSuccessor. \n - \n

Space complexity : \n

\nBecause we only manipulate pointers that already exist, the Morris\ntraversal uses constant space.

\n \n

\n

Analysis written by: @emptyset.

\n